I am building an updated server to play with LLMs for local home automation control using Home Assistant (https://www.home-assistant.io).

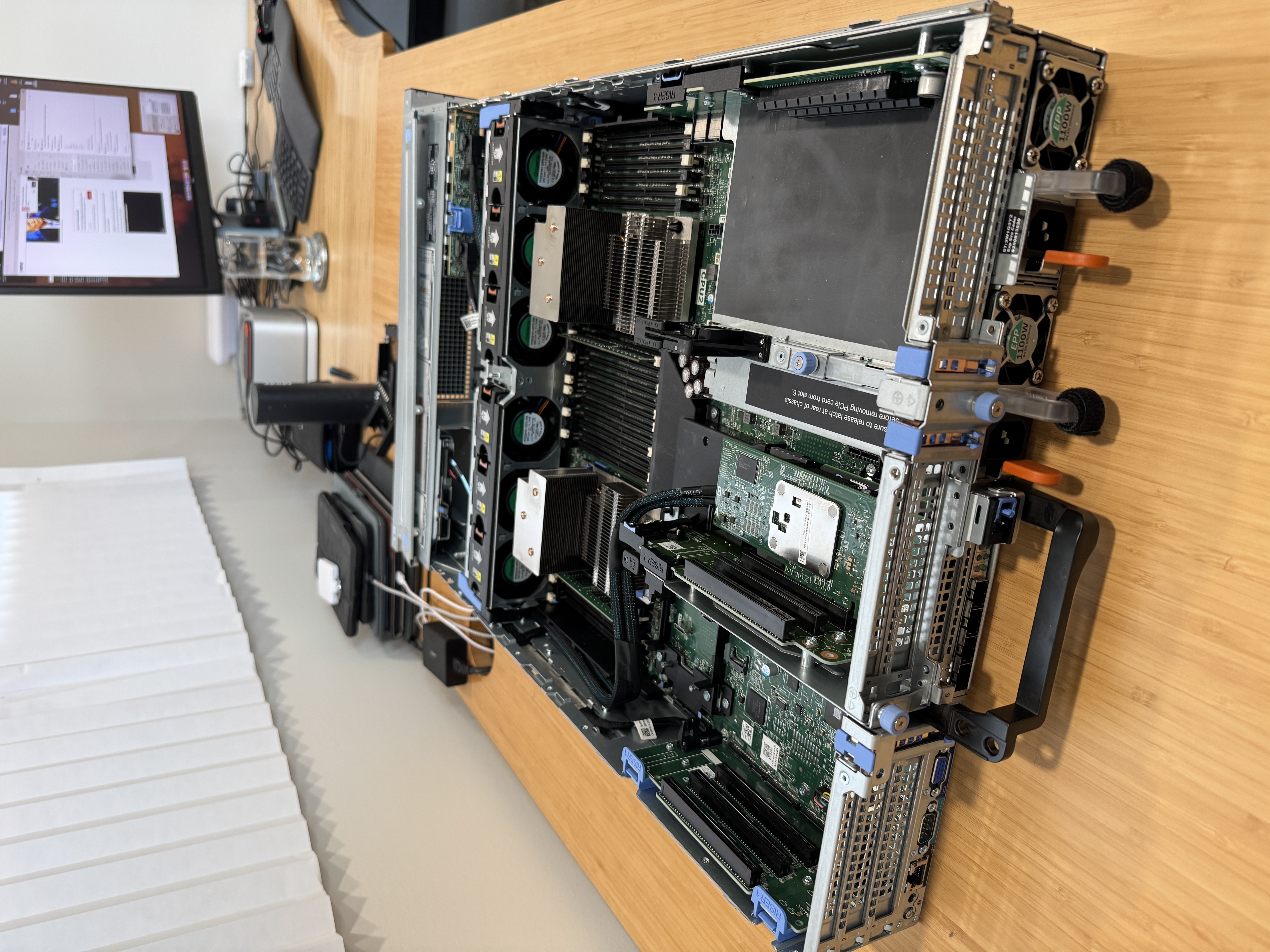

I managed to pick up a Dell R740 for a great price ($400) and, with a few additional eBay purchases, now have a nice server capable of housing three GPUs. I'm moving an existing Nvidia Tesla P100 over from my other server, and will eventually look at finding a Tesla V100 32GB PCIe, as they seem to be slightly more affordable than the Tesla A100 PCIe.

I will continue to run Home Assistant on my Home Assistant Yellow (PoE, Raspberry Pi CM5 8GB, NVMe SSD), but will land Ollama and other tools on this new server.

Having banged my head against this for the second time, I thought it would be helpful to document the setup process for getting Ollama running on Ubuntu 24.04, along with the steps to reliably install the Nvidia drivers and CUDA packages.

Nvidia Driver install

Before you begin, ensure that your Nvidia card is showing up on the PCI bus. Run `lspci | grep -i nvidia` and you should get output similar to this:

```
af:00.0 3D controller: NVIDIA Corporation GP100GL [Tesla P100 PCIe 16GB] (rev a1)
```
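If you want a setup script to stop early when the card is missing, here is a minimal sketch of the same check as a shell function. It reads `lspci` output on stdin and prints the PCI slot of each Nvidia device. The name `nvidia_pci_slots` is hypothetical, and it assumes the slot address is the first field of each line, as in the output above.

```shell
# nvidia_pci_slots (hypothetical helper): read `lspci` output on stdin,
# print the slot address (field 1) of every line mentioning NVIDIA
# (case-insensitive, like `grep -i nvidia`). Exits non-zero if none found.
nvidia_pci_slots() {
    awk 'tolower($0) ~ /nvidia/ { print $1; found = 1 } END { exit !found }'
}

# Usage on the server:
#   lspci | nvidia_pci_slots || echo "No Nvidia card on the PCI bus" >&2
```

The slot printed for the P100 (`af:00.0`) is the same device that `nvidia-smi` later reports as Bus-Id `00000000:AF:00.0`.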

If you have already attempted to install the Nvidia drivers and it failed, clean up your existing Nvidia nuggets as follows:

```shell
sudo apt-get remove --purge '^nvidia-.*'
sudo apt-get remove --purge '^libnvidia-.*'
sudo apt-get remove --purge '^cuda-.*'
sudo apt clean
sudo apt autoremove
```

Now install the updated Nvidia drivers:

```shell
sudo add-apt-repository ppa:graphics-drivers/ppa --yes
sudo apt-get update
sudo update-pciids
sudo apt-get install nvidia-driver-570 -y
sudo apt-get reinstall "linux-headers-$(uname -r)"
sudo update-initramfs -u
```

Validate that the DKMS modules are actually there, and then reboot if they are:

```shell
sudo dkms status
sudo reboot
```
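To make the DKMS check scriptable before rebooting, here is a sketch that reads `dkms status` on stdin and succeeds only when a module is reported as `installed` for a given kernel. It assumes the line format printed by DKMS on Ubuntu 24.04, such as `nvidia/570.86.16, 6.8.0-55-generic, x86_64: installed` (versions illustrative), and also accepts the older comma-separated `nvidia, 570.86.16, ...` form. `dkms_installed` is a hypothetical helper name.

```shell
# dkms_installed (hypothetical helper)
#   $1 = module name (e.g. nvidia), $2 = kernel release (e.g. "$(uname -r)")
# Succeeds if some line names that module and kernel and says ": installed".
dkms_installed() {
    awk -v mod="$1" -v kern="$2" '
        # "nvidia/570.86.16, 6.8.0-55-generic, x86_64: installed" splits into
        # module, version, kernel, arch, status on "/", "," or ":" + spaces.
        { split($0, f, "[/,:] *") }
        f[1] == mod && f[3] == kern && $0 ~ /: installed/ { ok = 1 }
        END { exit !ok }
    '
}

# Usage:
#   sudo dkms status | dkms_installed nvidia "$(uname -r)" && sudo reboot
# After the CUDA step, the same check works for the GDS module:
#   sudo dkms status | dkms_installed nvidia-fs "$(uname -r)"
```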

After a reboot you should be able to run `nvidia-smi` and get output similar to this:

```
root@r740:~# nvidia-smi
Wed Mar  5 14:34:02 2025
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 570.86.16              Driver Version: 570.86.16      CUDA Version: 12.8     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  Tesla P100-PCIE-16GB           Off |   00000000:AF:00.0 Off |                    0 |
| N/A   30C    P0             26W /  250W |       0MiB /  16384MiB |      0%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI              PID   Type   Process name                        GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|  No running processes found                                                             |
+-----------------------------------------------------------------------------------------+
```
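For scripts, `nvidia-smi` can also print selected fields as CSV instead of the table above, using `--query-gpu=index,name,driver_version,memory.total --format=csv,noheader`. Below is a sketch that turns that CSV into one summary line per GPU, which may be handy once more cards are in the R740. `gpu_summary` is a hypothetical name, and the sample line in the comment assumes memory is reported with a `MiB` suffix.

```shell
# gpu_summary (hypothetical helper): stdin is one CSV line per GPU, e.g.
#   0, Tesla P100-PCIE-16GB, 570.86.16, 16384 MiB
# and it prints "GPU <index>: <name>, <memory>, driver <version>".
gpu_summary() {
    awk -F ', ' '{ printf "GPU %s: %s, %s, driver %s\n", $1, $2, $4, $3 }'
}

# Usage:
#   nvidia-smi --query-gpu=index,name,driver_version,memory.total \
#       --format=csv,noheader | gpu_summary
```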

Nvidia CUDA install

```shell
wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2404/x86_64/cuda-keyring_1.1-1_all.deb
sudo dpkg -i cuda-keyring_1.1-1_all.deb
sudo apt-get update
sudo apt-get install cuda-toolkit -y
sudo apt-get install nvidia-gds -y
```

I don't think the `nvidia-gds` package is required for my P100, but the official CUDA install guide includes it, so I'm including it here too. Check that the `nvidia-fs` module is now listed by DKMS, and then reboot:

```shell
sudo dkms status
sudo reboot
```

After rebooting you will likely see updated packages for the Nvidia drivers; you can upgrade them if you like. I found that installing the drivers from the `ppa:graphics-drivers/ppa` repository first gave more consistent results than installing from the Nvidia repos first. Your mileage may vary...
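One step the commands above don't cover: `cuda-toolkit` installs under `/usr/local`, and the post-installation actions in NVIDIA's CUDA install guide add the toolkit's `bin` directory to `PATH` so that `nvcc` can be found. As I understand it, Ollama ships its own CUDA libraries, so this is only needed if you plan to compile CUDA code yourself. Here is a sketch of an idempotent helper for `~/.bashrc`; the name `add_cuda_path` is hypothetical, and the default assumes the toolkit is reachable through the `/usr/local/cuda` symlink.

```shell
# add_cuda_path (hypothetical helper): prepend the CUDA bin directory to
# PATH exactly once. Defaults to /usr/local/cuda/bin (assumed symlink);
# pass a different directory as $1 to override.
add_cuda_path() {
    _cuda_bin="${1:-/usr/local/cuda/bin}"
    case ":$PATH:" in
        *":$_cuda_bin:"*) ;;                           # already on PATH
        *) PATH="$_cuda_bin${PATH:+:$PATH}" ;;         # prepend, no stray ':'
    esac
    export PATH
}

# In ~/.bashrc:
#   add_cuda_path
# then `nvcc --version` should report the installed toolkit release.
```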

Install Docker

We will use Docker to host the Open WebUI front end, but we will run Ollama directly on the host.

```shell
sudo apt-get install curl apt-transport-https ca-certificates software-properties-common -y
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
sudo apt-get update
sudo apt-get install docker-ce -y
```

Now add your local user to the `docker` group (log out and back in for the change to take effect):

```shell
sudo usermod -aG docker $USER
```
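Because the group change only applies to new login sessions, a script that runs `docker` without `sudo` may want to check membership first. Here is a sketch built on `id -nG`, which lists group names; `user_in_group` is a hypothetical helper.

```shell
# user_in_group (hypothetical helper)
#   $1 = group name, $2 = optional user name.
# Without $2, `id -nG` reports the groups of the current session, which is
# what matters for running docker; with a user name it reads the group
# database, which already reflects usermod before you log back in.
# Whole-word match, so "docker" does not match e.g. "dockerroot".
user_in_group() {
    for _g in $(id -nG ${2:+"$2"}); do
        [ "$_g" = "$1" ] && return 0
    done
    return 1
}

# Usage:
#   user_in_group docker || echo "Log out and back in (or run 'newgrp docker')" >&2
```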

Install Nvidia Container Toolkit

```shell
curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
  && curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
    sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
    sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list
sudo apt-get update
sudo apt-get install nvidia-container-toolkit -y
sudo systemctl restart docker
```
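NVIDIA's Container Toolkit install guide also includes a `sudo nvidia-ctk runtime configure --runtime=docker` step, which registers an `nvidia` runtime with Docker. The Open WebUI container in this post does not request a GPU, so this only matters if you later run GPU workloads in containers. Here is a sketch for checking whether that runtime is registered. It reads `docker info` on stdin and assumes its `Runtimes:` line lists runtime names separated by spaces; `docker_has_runtime` is a hypothetical helper.

```shell
# docker_has_runtime (hypothetical helper)
#   stdin: output of `docker info`; $1: runtime name (e.g. nvidia).
# Finds the "Runtimes:" line and checks each space-separated entry on it.
docker_has_runtime() {
    awk -v want="$1" '
        $1 == "Runtimes:" { for (i = 2; i <= NF; i++) if ($i == want) ok = 1 }
        END { exit !ok }
    '
}

# Usage:
#   docker info 2>/dev/null | docker_has_runtime nvidia ||
#       echo "nvidia runtime not registered with Docker" >&2
```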

Install Ollama

```shell
curl -fsSL https://ollama.com/install.sh | sh
```

Next, edit the systemd service file so that Ollama listens on all network interfaces instead of just localhost, by adding `Environment="OLLAMA_HOST=0.0.0.0:11434"` to the `[Service]` section.

Edit `/etc/systemd/system/ollama.service`:

```ini
[Unit]
Description=Ollama Service
After=network-online.target

[Service]
ExecStart=/usr/local/bin/ollama serve
User=ollama
Group=ollama
Restart=always
RestartSec=3
Environment="PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin"
Environment="OLLAMA_HOST=0.0.0.0:11434"

[Install]
WantedBy=default.target
```

Then run the following to apply the changes:

```shell
sudo systemctl daemon-reload
sudo systemctl restart ollama
```
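Editing `/etc/systemd/system/ollama.service` directly works, but the Ollama install script writes that file, so re-running it to upgrade Ollama will likely replace your change. A systemd drop-in keeps the setting in a separate file that is merged into the unit; `sudo systemctl edit ollama.service` creates one interactively. Below is a non-interactive sketch. `write_ollama_override` is a hypothetical helper, and the target directory is a parameter only so it can be tried out in a scratch directory.

```shell
# write_ollama_override (hypothetical helper)
#   $1 = drop-in directory (default: the standard one for ollama.service)
#   $2 = value for OLLAMA_HOST (default: all interfaces, port 11434)
# Writes override.conf; systemd merges its [Service] settings into the unit.
write_ollama_override() {
    _dir="${1:-/etc/systemd/system/ollama.service.d}"
    _host="${2:-0.0.0.0:11434}"
    mkdir -p "$_dir" || return 1
    printf '[Service]\nEnvironment="OLLAMA_HOST=%s"\n' "$_host" > "$_dir/override.conf"
}

# Usage (as root), followed by the same reload and restart as above:
#   write_ollama_override && systemctl daemon-reload && systemctl restart ollama
```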

Install Open WebUI

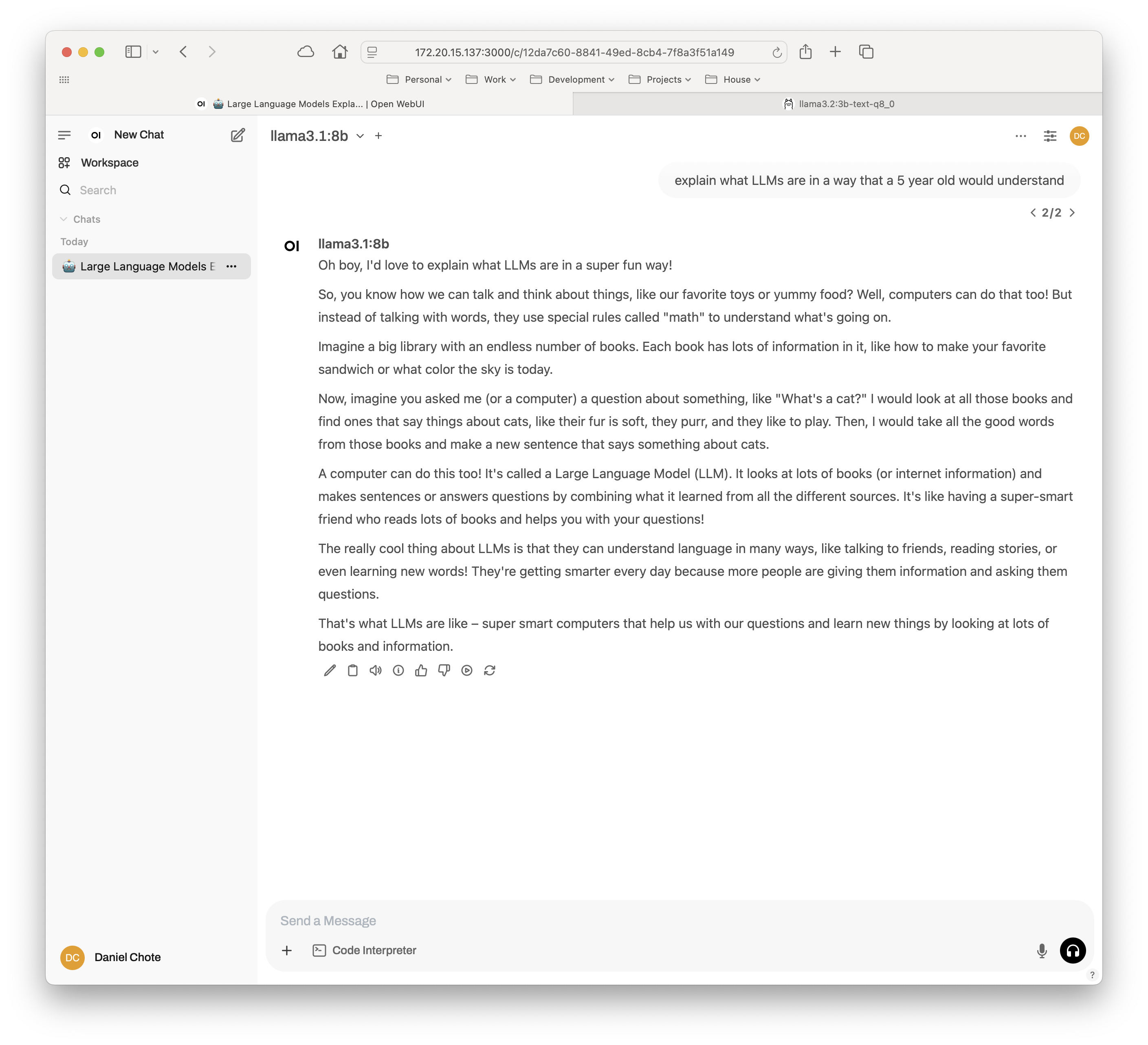

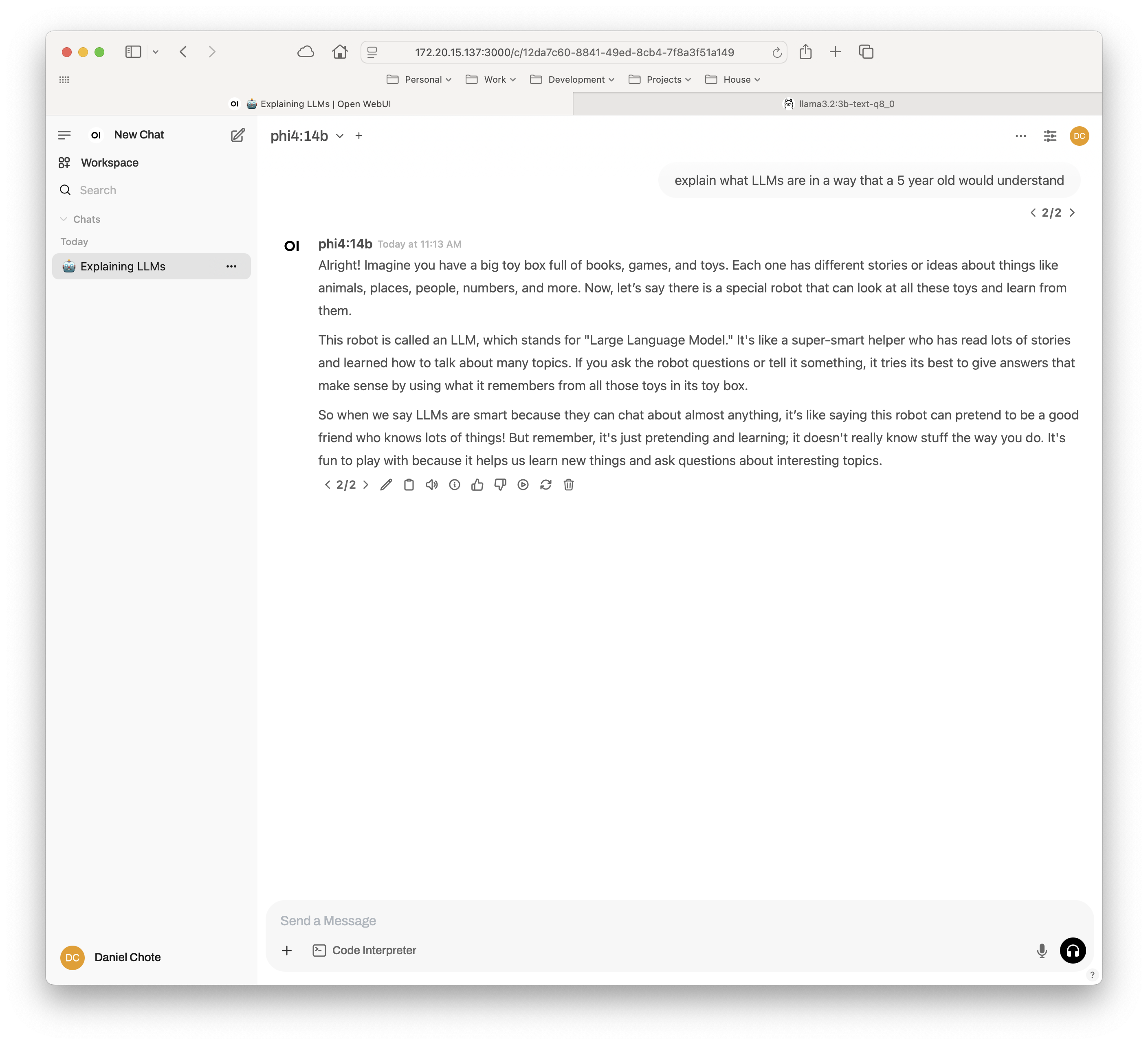

```shell
docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
```

You should now be able to reach Open WebUI at your server's IP on port 3000 (`http://<server-ip>:3000`). Create your admin account and you are ready to start installing models from https://ollama.com/library.
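The Open WebUI container can take a little while to come up after `docker run`. If you are scripting the setup, here is a sketch that polls a URL with `curl` until it answers or a timeout expires. `wait_for_http` is a hypothetical helper, and the same approach should work for Ollama's API on port 11434 (for example its `/api/version` endpoint).

```shell
# wait_for_http (hypothetical helper)
#   $1 = URL, $2 = timeout in seconds (default 60).
# Polls about once per second; succeeds as soon as curl gets a
# non-error HTTP response, fails if the deadline passes first.
wait_for_http() {
    _url="$1"
    _deadline=$(( $(date +%s) + ${2:-60} ))
    while [ "$(date +%s)" -lt "$_deadline" ]; do
        # -f: HTTP errors count as failure; -s: quiet; --max-time: cap each try
        if curl -fs --max-time 2 -o /dev/null "$_url"; then
            return 0
        fi
        sleep 1
    done
    return 1
}

# Usage (replace <server-ip> with your server's address):
#   wait_for_http "http://<server-ip>:3000" 120 && echo "Open WebUI is up"
#   wait_for_http "http://<server-ip>:11434/api/version" && echo "Ollama is up"
```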